Elasticsearch: Inverted Index and Analysis

Books and search engines

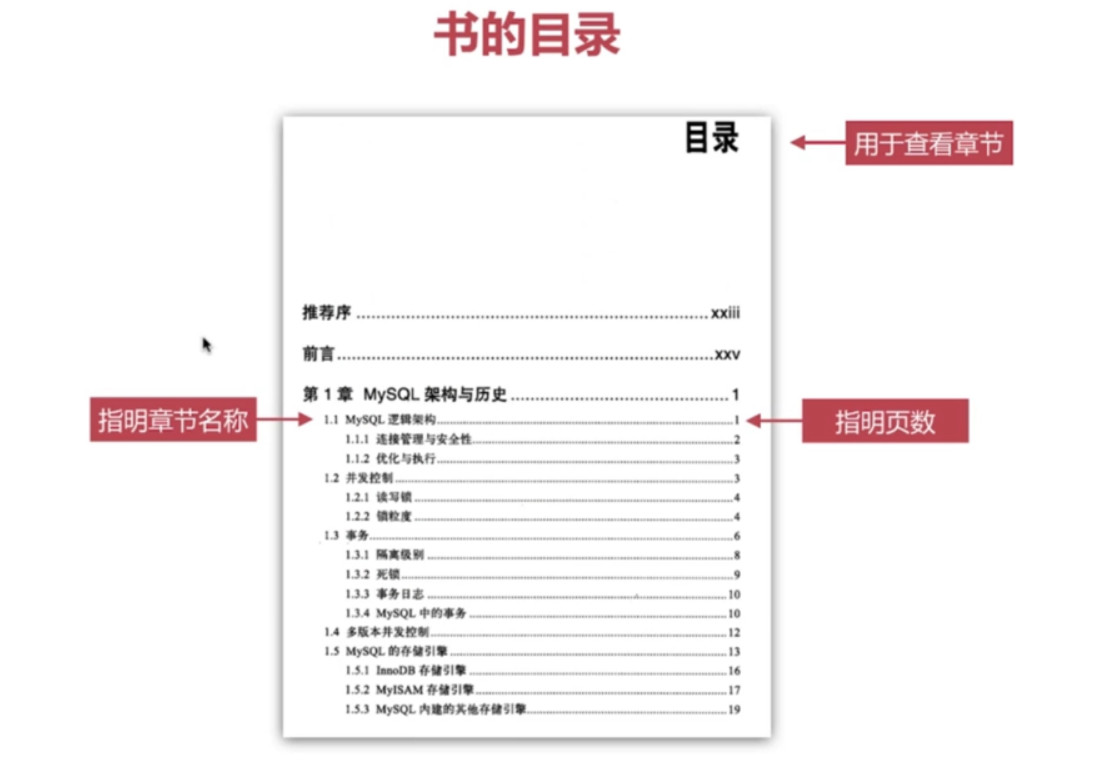

The table of contents corresponds to a forward index

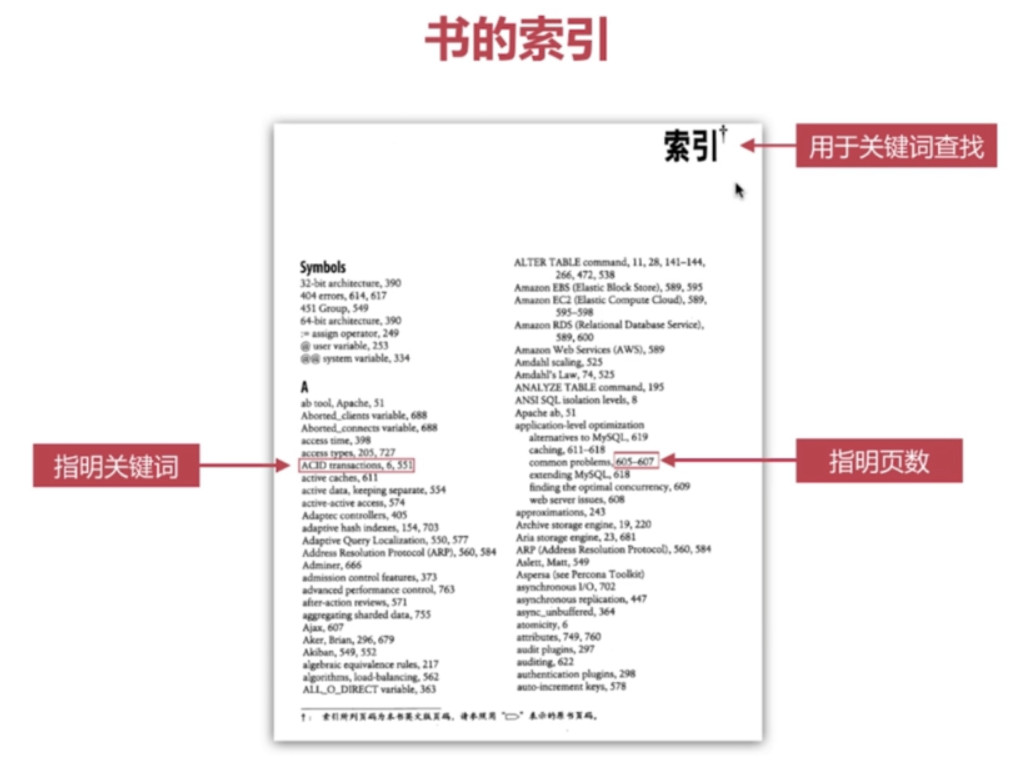

The index at the back of the book corresponds to an inverted index

Search engines

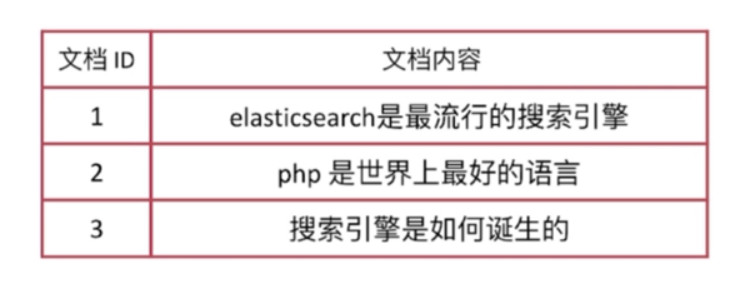

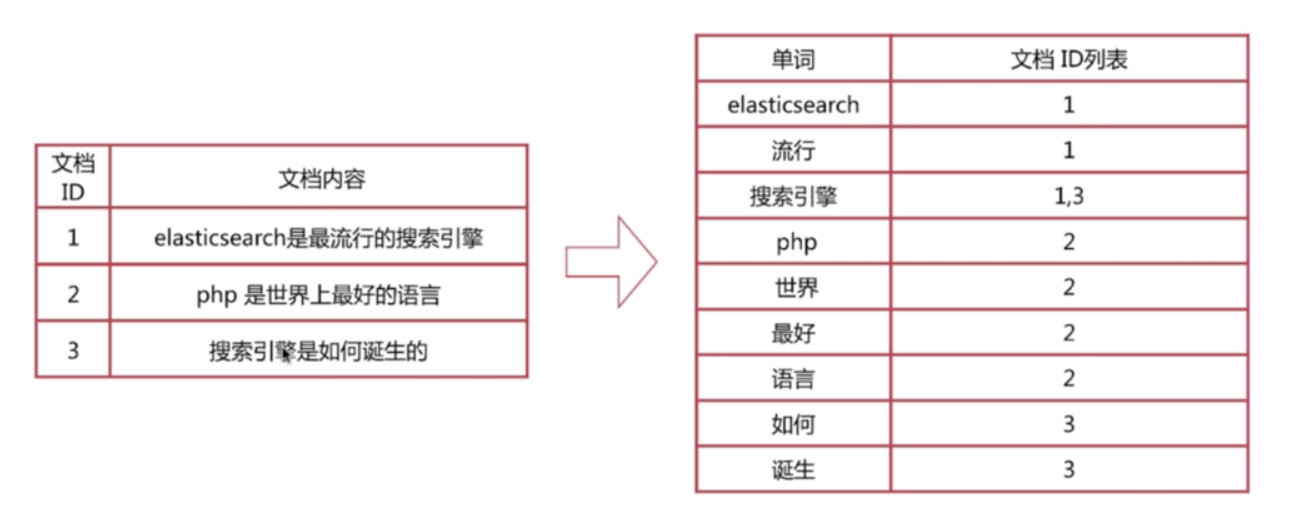

Forward index

Maps a document ID to the document's content and the terms it contains

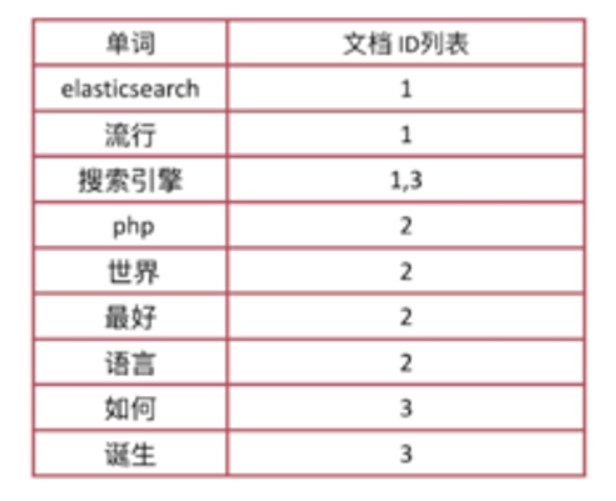

Inverted index

Maps a term to the IDs of the documents that contain it

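Search flow

A search combines the forward index and the inverted index:

- Query for documents containing "搜索引擎" (search engine)

- Use the inverted index to get the IDs of the documents containing "搜索引擎": 1 and 3

- Use the forward index to fetch the full content of documents 1 and 3

- Return the final results to the user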
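The lookup flow above can be sketched in a few lines of Python. This is a minimal illustration with simplifying assumptions. The corpus is made up and does not come from the figures. Terms are produced by splitting on whitespace. Plain dictionaries stand in for the real on-disk structures.

```python
from collections import defaultdict

# Hypothetical corpus; these are not the documents shown in the figures above.
DOCS = {
    1: "elasticsearch is a search engine",
    2: "lucene provides an inverted index",
    3: "a search engine needs an inverted index",
}


def build_forward_index(docs):
    """Forward index: doc id -> (original content, list of terms)."""
    return {doc_id: (text, text.split()) for doc_id, text in docs.items()}


def build_inverted_index(docs):
    """Inverted index: term -> sorted list of ids of the docs containing it."""
    inverted = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.split():
            inverted[term].add(doc_id)
    return {term: sorted(ids) for term, ids in inverted.items()}


def search(term, forward, inverted):
    # Step 1: look the term up in the inverted index to get matching doc ids.
    doc_ids = inverted.get(term, [])
    # Step 2: fetch the full content of each hit from the forward index.
    return [(doc_id, forward[doc_id][0]) for doc_id in doc_ids]


forward = build_forward_index(DOCS)
inverted = build_inverted_index(DOCS)
print(search("engine", forward, inverted))
# [(1, 'elasticsearch is a search engine'), (3, 'a search engine needs an inverted index')]
```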

Components of an inverted index

The inverted index is the core of a search engine. It consists of two main parts:

- Term Dictionary

- Posting List

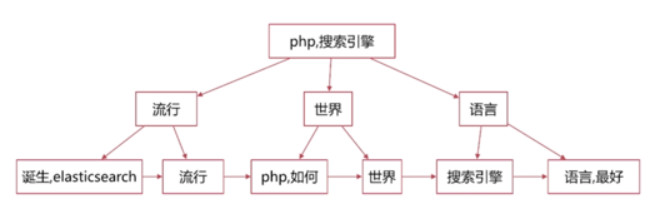

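Term dictionary

The term dictionary is an important part of the inverted index. It:

- records every term that appears in the documents, so it is usually large

- records how each term links to its posting list

The term dictionary is usually implemented as a B+ tree, as in the figure below (the terms in the figure are sorted by pinyin):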

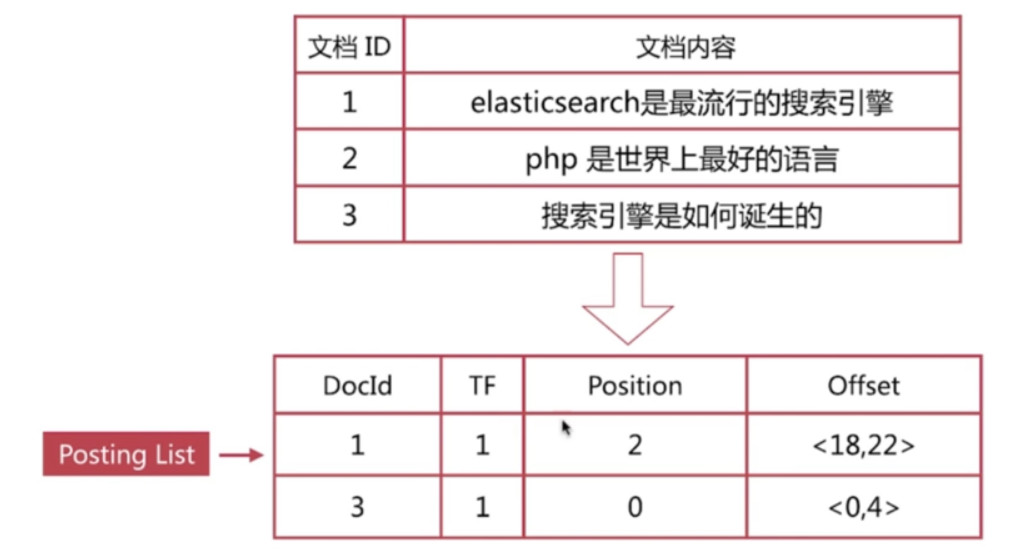
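The next sketch shows why keeping terms in sorted order helps. It stores the terms in a sorted Python list and uses binary search (bisect) in place of a B+ tree. The postings are hypothetical. Lucene, the library underneath Elasticsearch, actually uses a different structure: a block-based terms dictionary with an FST index. Treat this only as an illustration of sorted lookup.

```python
import bisect

# Hypothetical postings: term -> ids of the documents containing it.
POSTINGS = {
    "search": [1, 3],
    "engine": [1, 3],
    "elastic": [1],
    "elasticsearch": [1],
    "index": [2, 3],
}


class TermDictionary:
    """Terms kept in sorted order; binary search stands in for a B+ tree lookup."""

    def __init__(self, postings):
        self.terms = sorted(postings)
        self.postings = postings

    def lookup(self, term):
        """Return the posting list for `term`, or None if the term is absent."""
        i = bisect.bisect_left(self.terms, term)
        if i < len(self.terms) and self.terms[i] == term:
            return self.postings[term]
        return None

    def prefix(self, prefix):
        """Sorted order keeps terms sharing a prefix contiguous, so one scan suffices."""
        i = bisect.bisect_left(self.terms, prefix)
        matches = []
        while i < len(self.terms) and self.terms[i].startswith(prefix):
            matches.append(self.terms[i])
            i += 1
        return matches


terms = TermDictionary(POSTINGS)
print(terms.lookup("index"))    # [2, 3]
print(terms.prefix("elastic"))  # ['elastic', 'elasticsearch']
```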

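Posting list

The posting list records the set of documents that contain a term. It is made up of postings, and each posting mainly contains:

- Document ID, used to fetch the original document

- Term frequency (TF), the number of times the term occurs in the document, used later for relevance scoring

- Positions, the positions of the term in the document's token stream (there can be several), used for phrase queries

- Offsets, the start and end character offsets of the term in the document, used for highlighting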
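A posting can be modelled as a small record. The sketch below uses hypothetical documents and naive \w+ tokenization. It stores positions and offsets per document and derives TF from the number of positions. It also uses positions to answer a phrase query, which is the role positions play in the list above. It is an illustration only, not how Lucene encodes postings.

```python
import re
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Posting:
    doc_id: int
    positions: list = field(default_factory=list)  # token positions in the doc
    offsets: list = field(default_factory=list)    # (start, end) character offsets

    @property
    def tf(self):
        # Term frequency is simply how many times the term occurred.
        return len(self.positions)


def build_postings(docs):
    """term -> {doc_id: Posting}, tokenizing on runs of word characters."""
    postings = defaultdict(dict)
    for doc_id, text in docs.items():
        for pos, m in enumerate(re.finditer(r"\w+", text.lower())):
            posting = postings[m.group()].setdefault(doc_id, Posting(doc_id))
            posting.positions.append(pos)
            posting.offsets.append((m.start(), m.end()))
    return postings


def phrase_match(postings, phrase):
    """Ids of docs where the phrase's words occur at consecutive positions."""
    words = phrase.lower().split()
    if not words or any(w not in postings for w in words):
        return []
    candidates = set(postings[words[0]])
    for w in words[1:]:
        candidates &= set(postings[w])
    hits = []
    for doc_id in sorted(candidates):
        for start in postings[words[0]][doc_id].positions:
            if all(start + i in postings[w][doc_id].positions
                   for i, w in enumerate(words)):
                hits.append(doc_id)
                break
    return hits


DOCS = {  # hypothetical documents
    1: "elasticsearch is a search engine",
    2: "the engine behind search",
    3: "a search engine indexes text for search",
}
postings = build_postings(DOCS)
print(postings["search"][3].tf)                 # 2
print(postings["search"][3].offsets)            # [(2, 8), (33, 39)]
print(phrase_match(postings, "search engine"))  # [1, 3]
```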

Example: the term "搜索引擎" (search engine)

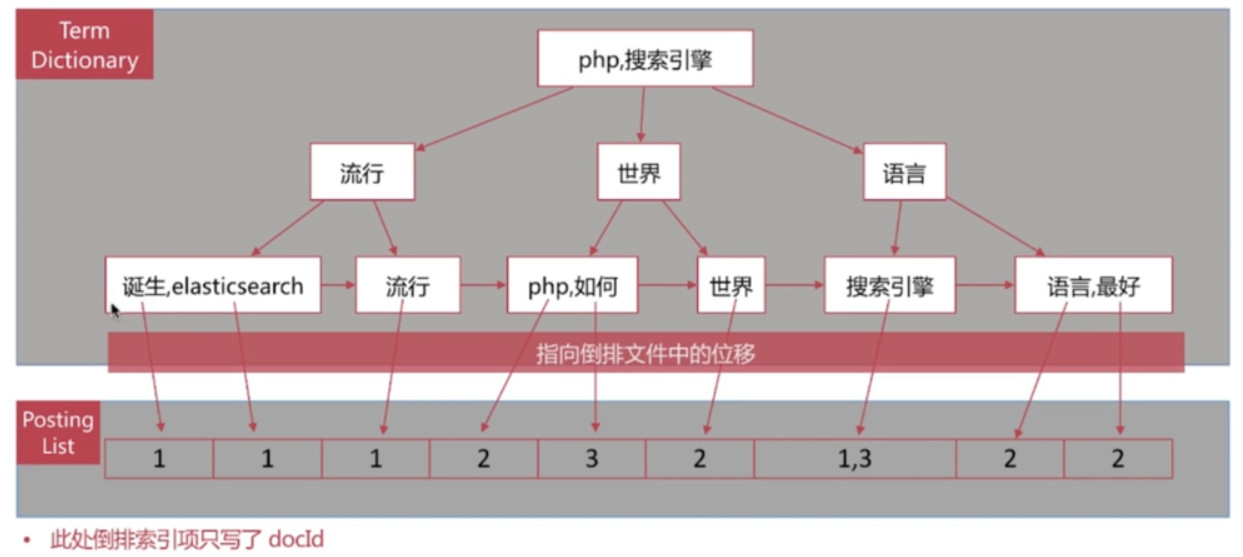

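Inverted index structure

Together, the term dictionary and the posting lists form the inverted index.

ES stores documents as JSON, and each document contains multiple fields. Each field has its own inverted index, as illustrated below: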

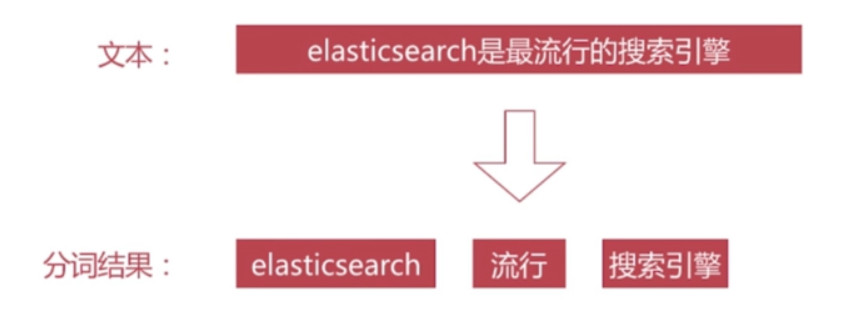
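The per-field layout can be sketched as one inverted index per field name. The documents and field names here are hypothetical. Analysis is reduced to lowercasing and splitting on whitespace.

```python
from collections import defaultdict


def build_field_indexes(docs):
    """One inverted index per field: field -> term -> sorted doc ids."""
    indexes = defaultdict(lambda: defaultdict(set))
    for doc_id, source in docs.items():
        for field_name, value in source.items():
            # Naive analysis for illustration: lowercase, split on whitespace.
            for term in value.lower().split():
                indexes[field_name][term].add(doc_id)
    return {
        field_name: {term: sorted(ids) for term, ids in terms.items()}
        for field_name, terms in indexes.items()
    }


DOCS = {  # hypothetical JSON documents with two text fields
    1: {"name": "John Smith", "job": "Engineer"},
    2: {"name": "Jane Smith", "job": "Search Engineer"},
}
indexes = build_field_indexes(DOCS)
print(indexes["name"]["smith"])  # [1, 2]
print(indexes["job"]["search"])  # [2]
```

A term indexed under one field is invisible to queries on another field. Here, "search" exists only in the job index.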

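Tokenization

Tokenization is the process of converting text into a sequence of words (terms or tokens). It is also known as text analysis; in ES it is called Analysis, as shown below: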

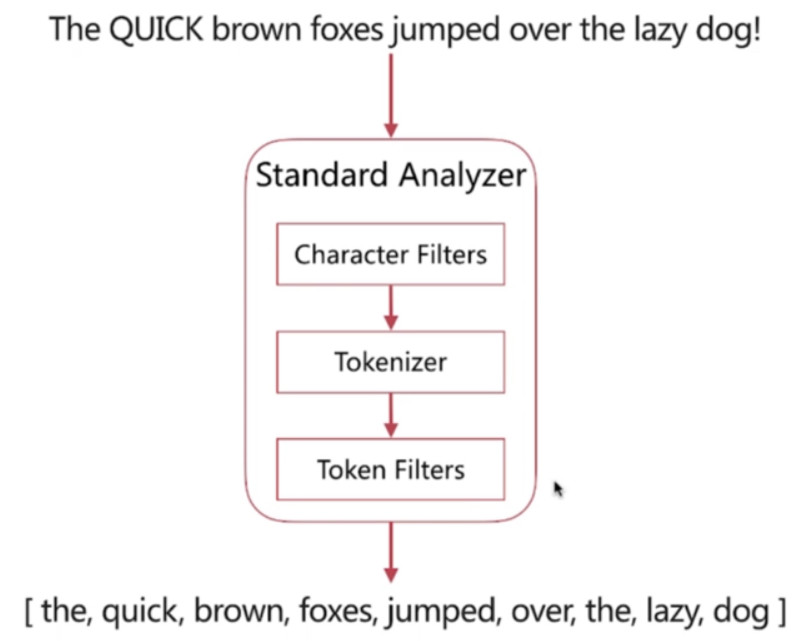

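Analyzer

The analyzer is the ES component dedicated to analysis. It is made up of:

Character Filters

Preprocess the raw text, for example by removing HTML markup

Tokenizer (exactly one per analyzer)

Splits the text into words according to a set of rules

Token Filters

Further process the words produced by the tokenizer, for example lowercasing them, removing them (stop words), or adding new ones (synonyms)

Order of execution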

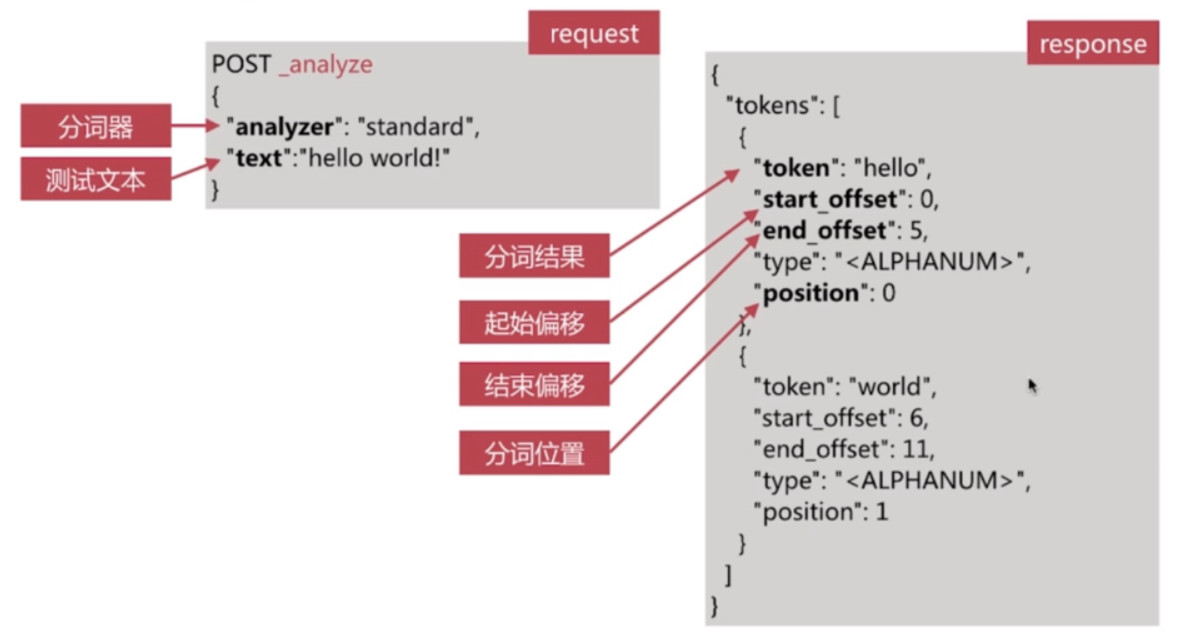
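The order of execution can be sketched as three loops. The component functions are hypothetical and greatly simplified. They stand in for html_strip, the whitespace tokenizer, and the lowercase and stop filters.

```python
import re


def strip_tags(text):
    """Character filter stand-in: drop anything that looks like an HTML tag."""
    return re.sub(r"<[^>]+>", "", text)


def whitespace_tokenizer(text):
    """Tokenizer stand-in: split on whitespace."""
    return text.split()


def lowercase_filter(tokens):
    return [t.lower() for t in tokens]


def stop_filter(tokens, stopwords=frozenset({"a", "an", "the"})):
    return [t for t in tokens if t not in stopwords]


def analyze(text, char_filters, tokenizer, token_filters):
    # 1. Character filters run first, in the order given, on the raw text.
    for char_filter in char_filters:
        text = char_filter(text)
    # 2. Exactly one tokenizer turns the filtered text into tokens.
    tokens = tokenizer(text)
    # 3. Token filters run last, in the order given, on the token list.
    for token_filter in token_filters:
        tokens = token_filter(tokens)
    return tokens


print(analyze("<b>The</b> Quick Fox", [strip_tags], whitespace_tokenizer,
              [lowercase_filter, stop_filter]))
# ['quick', 'fox']
```

Order matters. If the two token filters are swapped, "the" stays in the output, because this stop filter sees "The" before it is lowercased.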

Analyze API

- es提供一个测试分词的api接口, 方便验证分词效果, endpoint是

_analyze- 可以直接指定analyzer进行测试

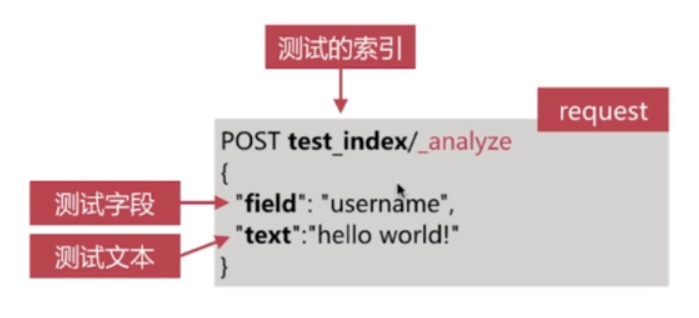

- 可以直接指定索引中的字段进行测试

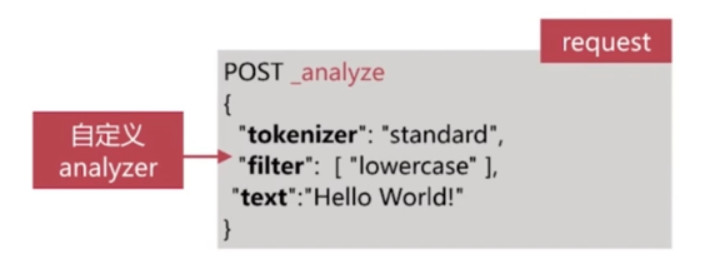

- 可以自定义分词器进行测试

直接指定analyzer进行测试

接口如下:

直接指定索引中的字段进行测试

接口如下:

自定义分词器进行测试

接口如下:
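The three request shapes can also be built programmatically. The sketch below only constructs request bodies and a urllib request object. The cluster URL, the index test_index and the field username are assumptions. Nothing is sent unless you pass the request to urllib.request.urlopen yourself.

```python
import json
import urllib.request

# Hypothetical cluster address; adjust for your own setup.
BASE_URL = "http://localhost:9200"


def by_analyzer(analyzer, text):
    """POST /_analyze with a named analyzer."""
    return "/_analyze", {"analyzer": analyzer, "text": text}


def by_field(index, field, text):
    """POST /<index>/_analyze: ES uses the analyzer mapped to `field`."""
    return f"/{index}/_analyze", {"field": field, "text": text}


def by_components(tokenizer, text, char_filter=None, token_filter=None):
    """POST /_analyze with an ad hoc analyzer assembled from components."""
    body = {"tokenizer": tokenizer, "text": text}
    if char_filter:
        body["char_filter"] = char_filter
    if token_filter:
        body["filter"] = token_filter
    return "/_analyze", body


def to_request(path, body):
    """Build (but do not send) an HTTP POST request for the given path and body."""
    return urllib.request.Request(
        BASE_URL + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


for path, body in [
    by_analyzer("standard", "Hello World"),
    by_field("test_index", "username", "Hello World"),  # hypothetical index/field
    by_components("standard", "Hello World", token_filter=["lowercase"]),
]:
    print("POST", path, json.dumps(body))
```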

Built-in analyzers

ES ships with the following analyzers:

- Standard

- Simple

- Whitespace

- Stop

- Keyword

- Pattern

- Language

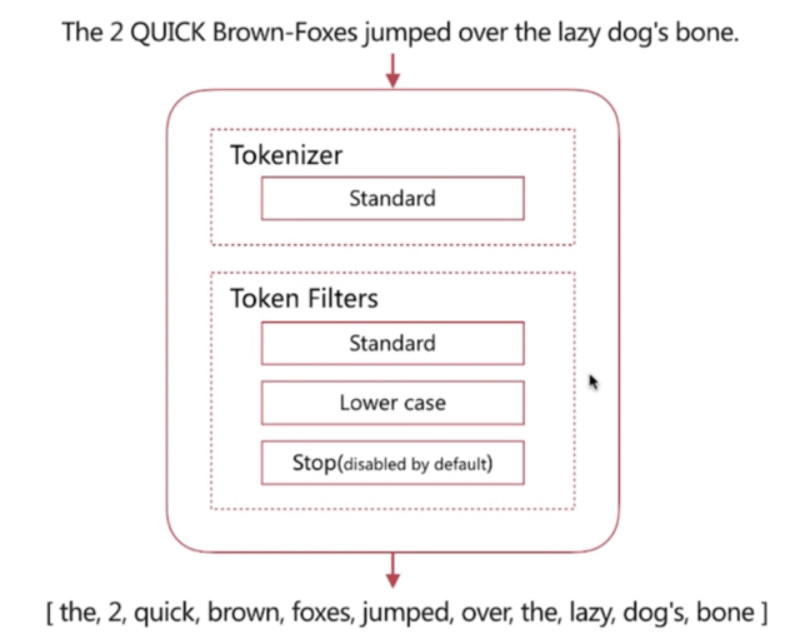

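Standard Analyzer

The default analyzer.

Its components are shown in the figure. Characteristics:

- splits text on word boundaries and supports multiple languages

- lowercases tokens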
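The Standard Analyzer's behavior on the sample sentence can be approximated with a regular expression. This is only a rough sketch. The real standard tokenizer implements the Unicode word-boundary rules (UAX #29). The regex below merely keeps runs of letters and digits, optionally joined by an apostrophe. For this particular sentence, it reproduces the tokens, offsets, and positions in the response below.

```python
import re

# Rough approximation, not the real UAX #29 rules: runs of letters/digits,
# optionally joined by an apostrophe as in "dog's".
WORD = re.compile(r"[^\W_]+(?:'[^\W_]+)*")


def standard_like(text):
    """Return (token, start_offset, end_offset, position) tuples, lowercased."""
    return [(m.group().lower(), m.start(), m.end(), pos)
            for pos, m in enumerate(WORD.finditer(text))]


SAMPLE = "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."
for token in standard_like(SAMPLE):
    print(token)
```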

运行示例:

# request

POST /_analyze

{

"analyzer": "standard",

"text": ["The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."]

}

# response

{

"tokens": [

{

"token": "the",

"start_offset": 0,

"end_offset": 3,

"type": "",

"position": 0

},

{

"token": "2",

"start_offset": 4,

"end_offset": 5,

"type": "",

"position": 1

},

{

"token": "quick",

"start_offset": 6,

"end_offset": 11,

"type": "",

"position": 2

},

{

"token": "brown",

"start_offset": 12,

"end_offset": 17,

"type": "",

"position": 3

},

{

"token": "foxes",

"start_offset": 18,

"end_offset": 23,

"type": "",

"position": 4

},

{

"token": "jumped",

"start_offset": 24,

"end_offset": 30,

"type": "",

"position": 5

},

{

"token": "over",

"start_offset": 31,

"end_offset": 35,

"type": "",

"position": 6

},

{

"token": "the",

"start_offset": 36,

"end_offset": 39,

"type": "",

"position": 7

},

{

"token": "lazy",

"start_offset": 40,

"end_offset": 44,

"type": "",

"position": 8

},

{

"token": "dog's",

"start_offset": 45,

"end_offset": 50,

"type": "",

"position": 9

},

{

"token": "bone",

"start_offset": 51,

"end_offset": 55,

"type": "",

"position": 10

}

]

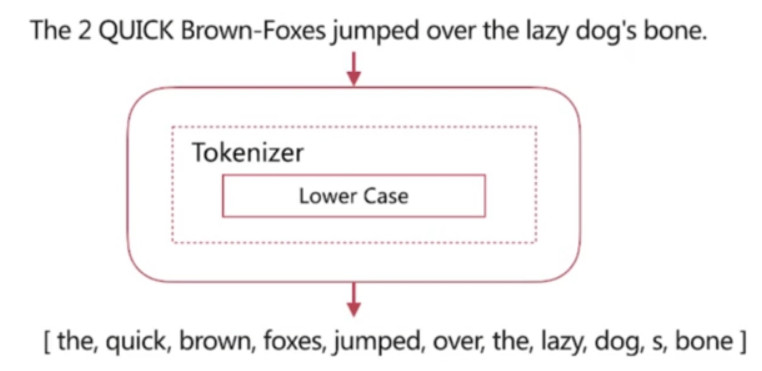

} Simple Analyzer

Its components are shown in the figure. Characteristics:

- splits on any non-letter character

- lowercases tokens
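The Simple Analyzer's two rules translate directly into a regex over runs of letters. Below is a sketch under that assumption. Digits and punctuation act as separators, which is why "2" disappears and "dog's" becomes "dog" and "s".

```python
import re

# Runs of letters only: digits, underscores and punctuation all split tokens.
LETTERS = re.compile(r"[^\W\d_]+")


def simple_like(text):
    """Return lowercased (token, start_offset, end_offset, position) tuples."""
    return [(m.group().lower(), m.start(), m.end(), pos)
            for pos, m in enumerate(LETTERS.finditer(text))]


SAMPLE = "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."
for token in simple_like(SAMPLE):
    print(token)
```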

运行示例:

# request

POST /_analyze

{

"analyzer": "simple",

"text": ["The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."]

}

# response

{

"tokens": [

{

"token": "the",

"start_offset": 0,

"end_offset": 3,

"type": "word",

"position": 0

},

{

"token": "quick",

"start_offset": 6,

"end_offset": 11,

"type": "word",

"position": 1

},

{

"token": "brown",

"start_offset": 12,

"end_offset": 17,

"type": "word",

"position": 2

},

{

"token": "foxes",

"start_offset": 18,

"end_offset": 23,

"type": "word",

"position": 3

},

{

"token": "jumped",

"start_offset": 24,

"end_offset": 30,

"type": "word",

"position": 4

},

{

"token": "over",

"start_offset": 31,

"end_offset": 35,

"type": "word",

"position": 5

},

{

"token": "the",

"start_offset": 36,

"end_offset": 39,

"type": "word",

"position": 6

},

{

"token": "lazy",

"start_offset": 40,

"end_offset": 44,

"type": "word",

"position": 7

},

{

"token": "dog",

"start_offset": 45,

"end_offset": 48,

"type": "word",

"position": 8

},

{

"token": "s",

"start_offset": 49,

"end_offset": 50,

"type": "word",

"position": 9

},

{

"token": "bone",

"start_offset": 51,

"end_offset": 55,

"type": "word",

"position": 10

}

]

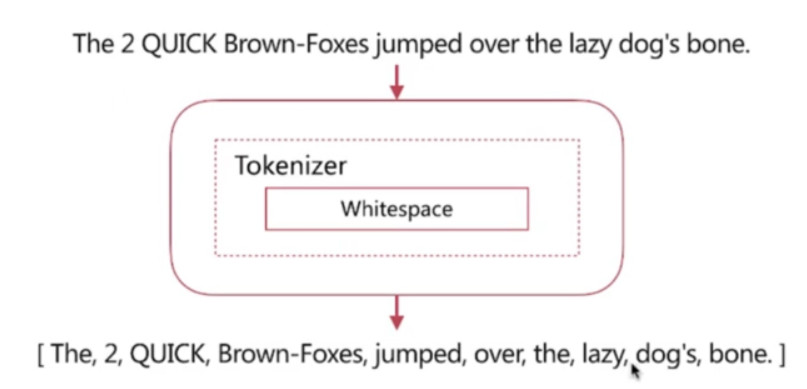

}Whitespace Analyzer

Its components are shown in the figure. Characteristic:

- splits on whitespace only
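Splitting on whitespace is easy to sketch. Tracking offsets with re.finditer shows why "Brown-Foxes" and "bone." survive as single tokens, punctuation and case included. The sample sentence is the one used throughout this section.

```python
import re


def whitespace_like(text):
    """Split on whitespace only; case and punctuation are kept as-is."""
    return [(m.group(), m.start(), m.end(), pos)
            for pos, m in enumerate(re.finditer(r"\S+", text))]


SAMPLE = "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."
for token in whitespace_like(SAMPLE):
    print(token)
```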

运行示例:

# request

POST /_analyze

{

"analyzer": "whitespace",

"text": ["The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."]

}

# response

{

"tokens": [

{

"token": "The",

"start_offset": 0,

"end_offset": 3,

"type": "word",

"position": 0

},

{

"token": "2",

"start_offset": 4,

"end_offset": 5,

"type": "word",

"position": 1

},

{

"token": "QUICK",

"start_offset": 6,

"end_offset": 11,

"type": "word",

"position": 2

},

{

"token": "Brown-Foxes",

"start_offset": 12,

"end_offset": 23,

"type": "word",

"position": 3

},

{

"token": "jumped",

"start_offset": 24,

"end_offset": 30,

"type": "word",

"position": 4

},

{

"token": "over",

"start_offset": 31,

"end_offset": 35,

"type": "word",

"position": 5

},

{

"token": "the",

"start_offset": 36,

"end_offset": 39,

"type": "word",

"position": 6

},

{

"token": "lazy",

"start_offset": 40,

"end_offset": 44,

"type": "word",

"position": 7

},

{

"token": "dog's",

"start_offset": 45,

"end_offset": 50,

"type": "word",

"position": 8

},

{

"token": "bone.",

"start_offset": 51,

"end_offset": 56,

"type": "word",

"position": 9

}

]

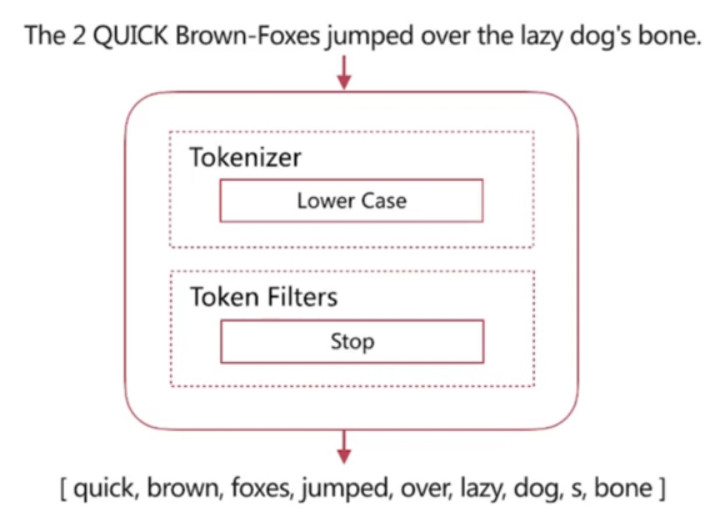

}Stop Analyzer

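Stop words are function words such as modal particles and other modifiers, for example the, an, 的, and 这.

Its components are shown in the figure. Characteristic:

- the same as the Simple Analyzer, plus stop word removal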
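The Stop Analyzer can be sketched as the Simple Analyzer plus a stop word check. A removed word still consumes a position. That is why the response below jumps from position 5 (over) to 7 (lazy). The word list is assumed to match Lucene's default English stop set.

```python
import re

# Assumed to match Lucene's default English stop word set.
ENGLISH_STOP_WORDS = frozenset({
    "a", "an", "and", "are", "as", "at", "be", "but", "by", "for", "if", "in",
    "into", "is", "it", "no", "not", "of", "on", "or", "such", "that", "the",
    "their", "then", "there", "these", "they", "this", "to", "was", "will",
    "with",
})
LETTERS = re.compile(r"[^\W\d_]+")


def stop_like(text):
    """Simple-analyzer tokens minus stop words; removed words leave a position gap."""
    result = []
    for pos, m in enumerate(LETTERS.finditer(text)):
        token = m.group().lower()
        if token not in ENGLISH_STOP_WORDS:
            result.append((token, m.start(), m.end(), pos))
    return result


SAMPLE = "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."
for token in stop_like(SAMPLE):
    print(token)
```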

运行示例:

# request

POST /_analyze

{

"analyzer": "stop",

"text": ["The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."]

}

# response

{

"tokens": [

{

"token": "quick",

"start_offset": 6,

"end_offset": 11,

"type": "word",

"position": 1

},

{

"token": "brown",

"start_offset": 12,

"end_offset": 17,

"type": "word",

"position": 2

},

{

"token": "foxes",

"start_offset": 18,

"end_offset": 23,

"type": "word",

"position": 3

},

{

"token": "jumped",

"start_offset": 24,

"end_offset": 30,

"type": "word",

"position": 4

},

{

"token": "over",

"start_offset": 31,

"end_offset": 35,

"type": "word",

"position": 5

},

{

"token": "lazy",

"start_offset": 40,

"end_offset": 44,

"type": "word",

"position": 7

},

{

"token": "dog",

"start_offset": 45,

"end_offset": 48,

"type": "word",

"position": 8

},

{

"token": "s",

"start_offset": 49,

"end_offset": 50,

"type": "word",

"position": 9

},

{

"token": "bone",

"start_offset": 51,

"end_offset": 55,

"type": "word",

"position": 10

}

]

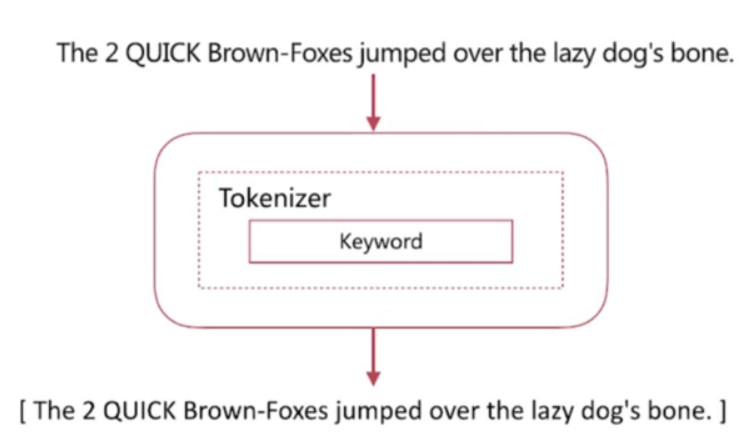

}Keyword Analyzer

Its components are shown in the figure. Characteristic:

- does not split: the entire input is emitted as a single token

运行示例:

# request

POST /_analyze

{

"analyzer": "keyword",

"text": ["The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."]

}

# response

{

"tokens": [

{

"token": "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone.",

"start_offset": 0,

"end_offset": 56,

"type": "word",

"position": 0

}

]

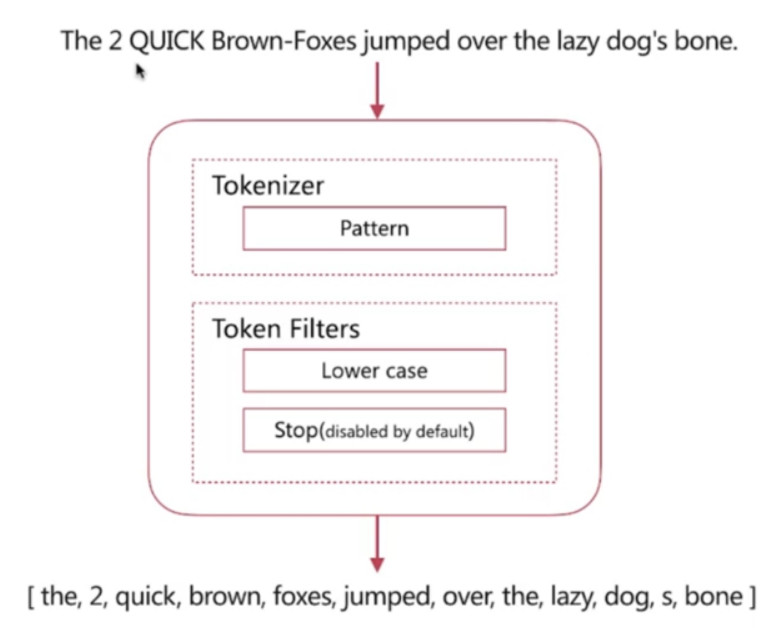

}Pattern Analyzer

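Its components are shown in the figure. Characteristics:

- splits on a custom separator defined by a regular expression

- the default pattern is \W+, i.e. any run of non-word characters acts as a separator

- lowercases tokens by default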
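The Pattern Analyzer's default behavior can be sketched by splitting on \W+ and lowercasing. Python's re syntax is an assumption here. Elasticsearch itself uses Java regular expressions, whose \W may treat non-ASCII characters differently. The sample sentence is plain ASCII, so the difference does not matter here.

```python
import re


def pattern_like(text, pattern=r"\W+"):
    """Split `text` on `pattern`, lowercase the pieces and drop empty ones."""
    pieces, start = [], 0
    for sep in re.finditer(pattern, text):
        if sep.start() > start:  # skip empty pieces between adjacent separators
            pieces.append((text[start:sep.start()].lower(), start, sep.start()))
        start = sep.end()
    if start < len(text):        # trailing piece after the last separator
        pieces.append((text[start:].lower(), start, len(text)))
    return [(tok, s, e, pos) for pos, (tok, s, e) in enumerate(pieces)]


SAMPLE = "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."
for token in pattern_like(SAMPLE):
    print(token)
```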

Example:

```
# request
POST /_analyze
{
  "analyzer": "pattern",
  "text": ["The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."]
}

# response
{
  "tokens": [
    {"token": "the", "start_offset": 0, "end_offset": 3, "type": "word", "position": 0},
    {"token": "2", "start_offset": 4, "end_offset": 5, "type": "word", "position": 1},
    {"token": "quick", "start_offset": 6, "end_offset": 11, "type": "word", "position": 2},
    {"token": "brown", "start_offset": 12, "end_offset": 17, "type": "word", "position": 3},
    {"token": "foxes", "start_offset": 18, "end_offset": 23, "type": "word", "position": 4},
    {"token": "jumped", "start_offset": 24, "end_offset": 30, "type": "word", "position": 5},
    {"token": "over", "start_offset": 31, "end_offset": 35, "type": "word", "position": 6},
    {"token": "the", "start_offset": 36, "end_offset": 39, "type": "word", "position": 7},
    {"token": "lazy", "start_offset": 40, "end_offset": 44, "type": "word", "position": 8},
    {"token": "dog", "start_offset": 45, "end_offset": 48, "type": "word", "position": 9},
    {"token": "s", "start_offset": 49, "end_offset": 50, "type": "word", "position": 10},
    {"token": "bone", "start_offset": 51, "end_offset": 55, "type": "word", "position": 11}
  ]
}
```

Language Analyzer

- provides analyzers for 30+ common languages

- supported languages: arabic, armenian, basque, bengali, brazilian, bulgarian, catalan, cjk, czech, danish, dutch, english, finnish, french, galician, german, greek, hindi, hungarian, indonesian, irish, italian, latvian, lithuanian, norwegian, persian, portuguese, romanian, russian, sorani, spanish, swedish, turkish, thai.

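Chinese word segmentation

Difficulties:

- Chinese word segmentation means splitting a sequence of Chinese characters into individual words. In English, spaces act as natural word boundaries, but written Chinese has no formal delimiter between words.

- The result depends heavily on context. With crossing ambiguity, for example, both of these segmentations of 乒乓球拍卖完了 are reasonable: 乒乓球拍/卖/完了 ("the ping-pong paddles / are sold / out") and 乒乓球/拍卖/完了 ("the ping-pong ball / auction / is over").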
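Crossing ambiguity is easy to reproduce with two textbook dictionary-based methods: forward and backward maximum matching. With the same hypothetical mini dictionary, each method yields one of the two readings above. This illustrates the problem only. It is not the algorithm used by any of the tools listed below.

```python
# Hypothetical mini dictionary; real systems use far larger dictionaries.
DICTIONARY = {"乒乓", "乒乓球", "乒乓球拍", "球拍", "拍卖", "卖", "完了"}


def forward_max_match(text, dictionary):
    """Scan left to right, always taking the longest dictionary word."""
    max_len = max(map(len, dictionary))
    words, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in dictionary or size == 1:  # single characters are the fallback
                words.append(piece)
                i += size
                break
    return words


def backward_max_match(text, dictionary):
    """Scan right to left, always taking the longest dictionary word."""
    max_len = max(map(len, dictionary))
    words, j = [], len(text)
    while j > 0:
        for size in range(min(max_len, j), 0, -1):
            piece = text[j - size:j]
            if piece in dictionary or size == 1:
                words.append(piece)
                j -= size
                break
    return words[::-1]


sentence = "乒乓球拍卖完了"
print("/".join(forward_max_match(sentence, DICTIONARY)))   # 乒乓球拍/卖/完了
print("/".join(backward_max_match(sentence, DICTIONARY)))  # 乒乓球/拍卖/完了
```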

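Common segmentation systems

IK

- Segments both Chinese and English text; supports modes such as ik_smart and ik_max_word

- Supports custom dictionaries, with hot reloading of the dictionary

- GitHub: https://github.com/medcl/elasticsearch-analysis-ik

jieba

- The most popular Chinese segmentation library in Python; supports segmentation and part-of-speech tagging

- Supports traditional Chinese, custom dictionaries, parallel segmentation, and more

- GitHub: https://github.com/sing1ee/elasticsearch-jieba-plugin

Segmentation systems based on natural language processing

HanLP

- HanLP is a Java toolkit made up of a series of models and algorithms, with the goal of bringing natural language processing into production use.

- GitHub: https://github.com/hankcs/HanLP

THULAC

- THULAC (THU Lexical Analyzer for Chinese) is a Chinese lexical analysis toolkit developed by the Natural Language Processing and Computational Social Science Lab at Tsinghua University. It provides Chinese word segmentation and part-of-speech tagging.

- GitHub: https://github.com/microbun/elasticsearch-thulac-plugin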

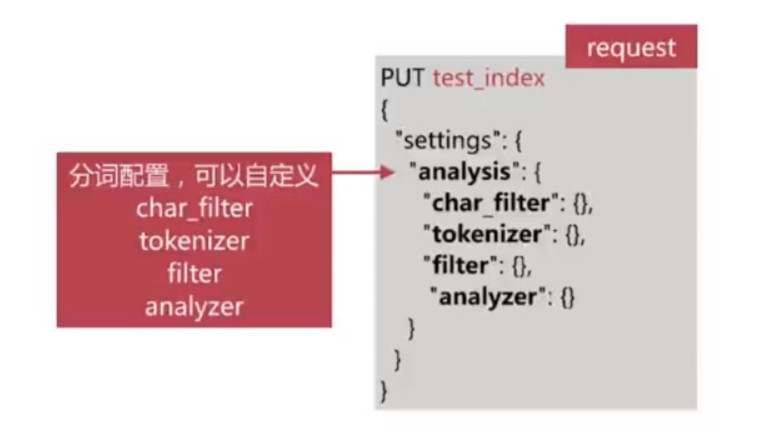

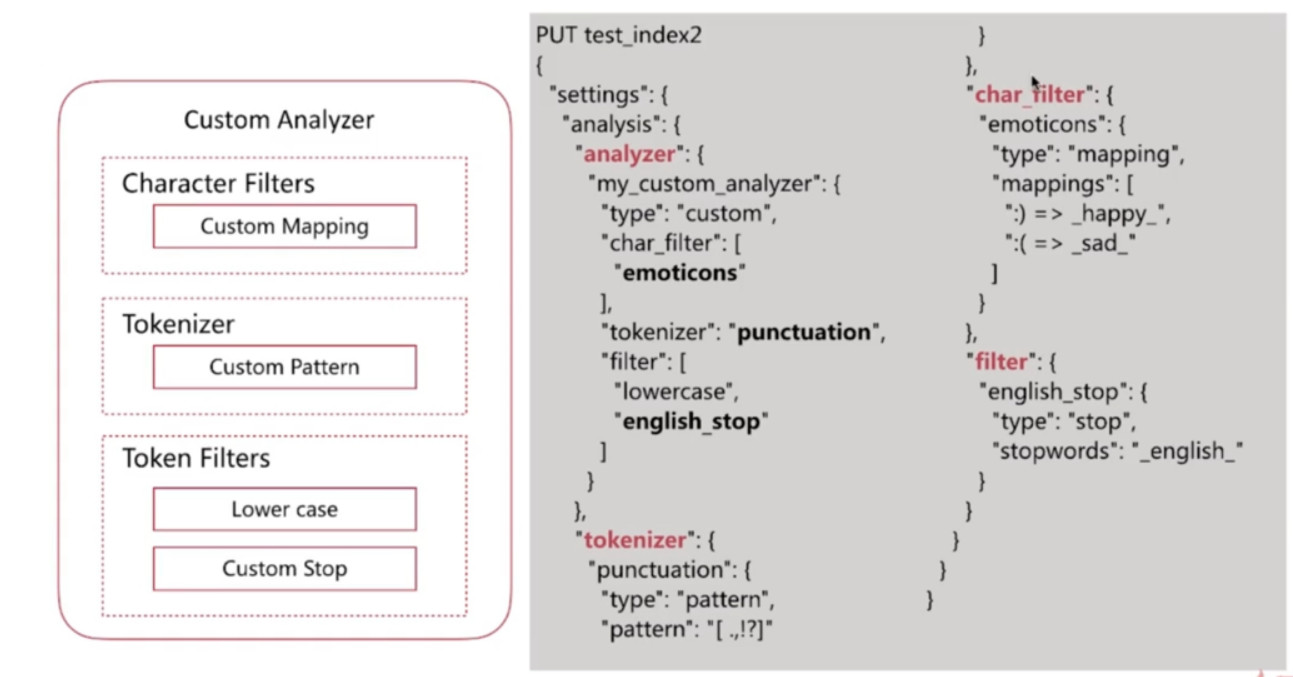

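Custom analysis

- When the built-in analyzers do not meet your needs, you can define a custom analyzer

- A custom analyzer is built from Character Filters, a Tokenizer, and Token Filters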

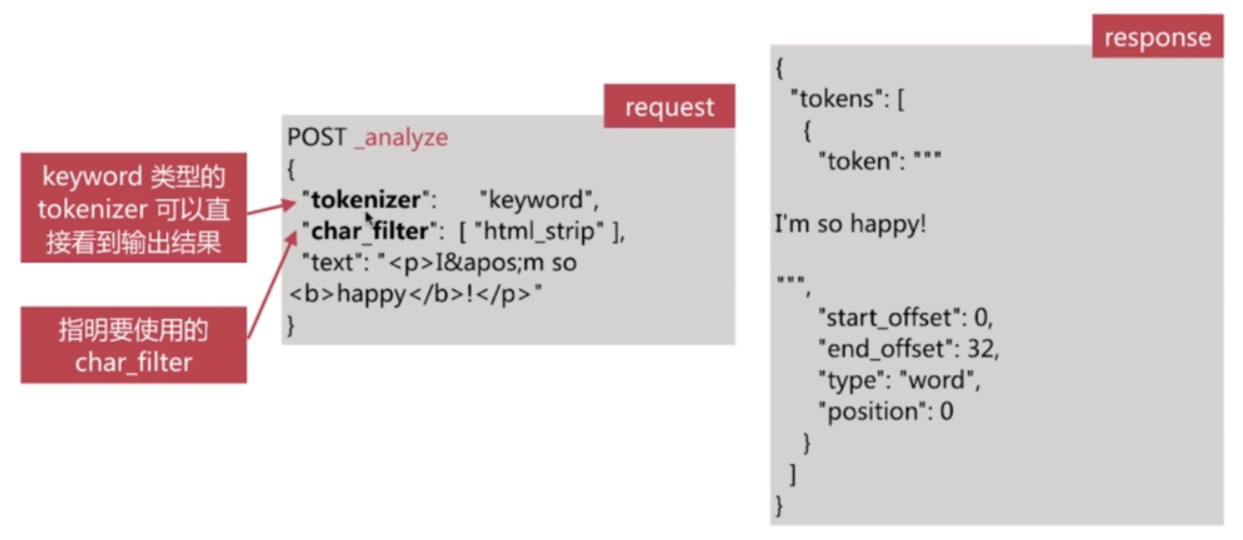

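Character Filters

Process the raw text before the Tokenizer, for example by adding, removing, or replacing characters.

Built-in character filters:

- HTML Strip: removes HTML tags and decodes HTML entities

- Mapping: replaces characters according to a mapping table

- Pattern Replace: replaces text that matches a regular expression

Because character filters change the text the tokenizer sees, they can affect the positions and offsets of the resulting tokens.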
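Here is a sketch of a mapping-style character filter. It reuses the two emoticon mappings from the test_index2 example later in this article. When several keys match at the same position, the longest one wins. Unlike Elasticsearch, this sketch does not track how offsets shift when text is replaced.

```python
# Mappings copied from the test_index2 example in this article.
MAPPINGS = {"(- ^ -)": "_口亨_", "(- - -)": "_哭唧唧_"}


def mapping_char_filter(text, mappings):
    """Replace mapped keys in `text`; at each position the longest matching key wins."""
    keys = sorted((k for k in mappings if k), key=len, reverse=True)  # ignore empty keys
    out, i = [], 0
    while i < len(text):
        for key in keys:
            if text.startswith(key, i):
                out.append(mappings[key])
                i += len(key)
                break
        else:
            out.append(text[i])  # no key matches here: copy the character through
            i += 1
    return "".join(out)


print(mapping_char_filter("(- ^ -), no li you", MAPPINGS))
# _口亨_, no li you
```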

Testing character filters

```
# request
POST _analyze
{
  "tokenizer": "keyword",
  "char_filter": ["html_strip"],
  "text": ["I 'm so happy
!"]
}

# response
{
  "tokens": [
    {
      "token": """
I 'm so happy
!
""",
      "start_offset": 0,
      "end_offset": 33,
      "type": "word",
      "position": 0
    }
  ]
}
```

Tokenizer

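Splits the raw text into words (terms or tokens) according to a set of rules.

Built-in tokenizers include:

- standard: splits text into words

- letter: splits on any non-letter character

- whitespace: splits on whitespace

- UAX URL Email: like standard, but keeps email addresses and URLs intact

- NGram and Edge NGram: split text into n-grams (useful for word suggestions)

- Path Hierarchy: splits a file path into its hierarchy levels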

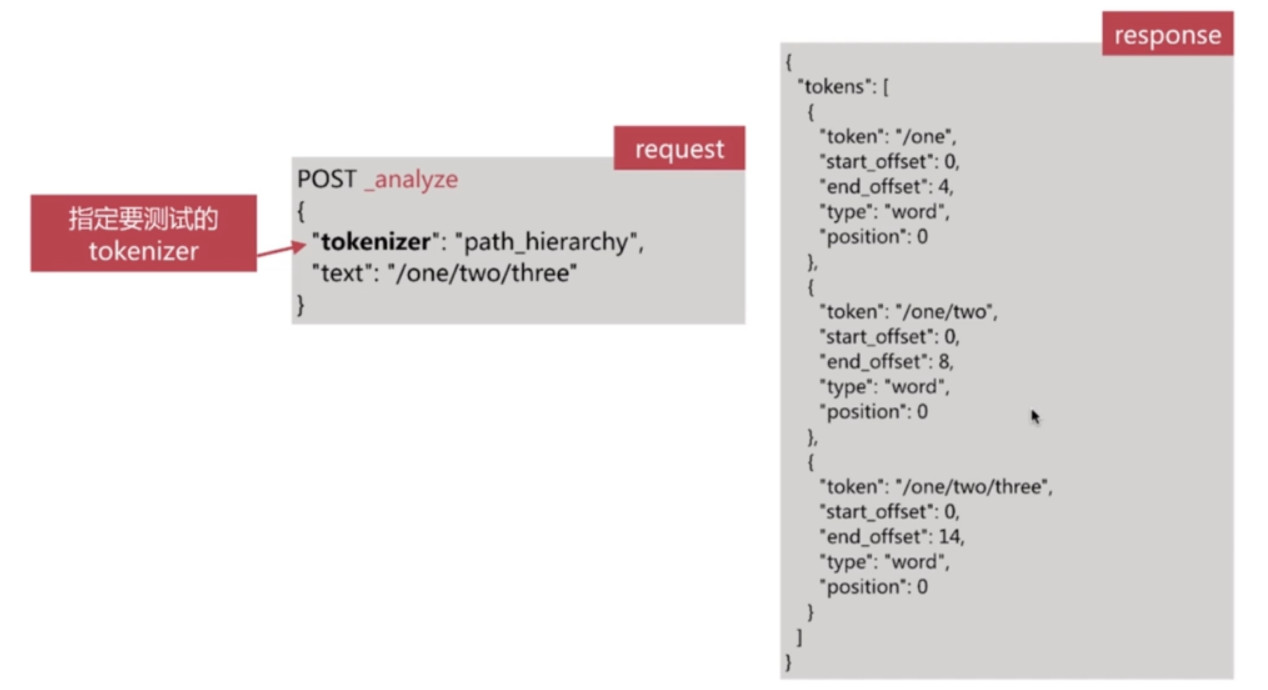
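The Path Hierarchy tokenizer's output can be sketched as emitting every ancestor path. As in the response below, all tokens share start offset 0 and position 0. This covers simple paths only. Options of the real tokenizer, such as replacement, reverse and skip, are not modelled.

```python
def path_hierarchy(path, delimiter="/"):
    """Return (token, start, end, position) for each ancestor path plus the full path."""
    tokens = []
    for i, ch in enumerate(path):
        # A delimiter (other than a leading one) closes an ancestor path.
        if ch == delimiter and i > 0:
            tokens.append((path[:i], 0, i, 0))
    if path:
        tokens.append((path, 0, len(path), 0))
    return tokens


for token in path_hierarchy("/root/jiavg/data"):
    print(token)
```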

Testing a tokenizer

```
# request
POST /_analyze
{
  "tokenizer": "path_hierarchy",
  "text": ["/root/jiavg/data"]
}

# response
{
  "tokens": [
    {"token": "/root", "start_offset": 0, "end_offset": 5, "type": "word", "position": 0},
    {"token": "/root/jiavg", "start_offset": 0, "end_offset": 11, "type": "word", "position": 0},
    {"token": "/root/jiavg/data", "start_offset": 0, "end_offset": 16, "type": "word", "position": 0}
  ]
}
```

Token Filters

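Add, remove, or modify the terms output by the Tokenizer.

Built-in token filters include:

- lowercase: converts all terms to lowercase

- stop: removes stop words

- NGram and Edge NGram: split terms into n-grams

- Synonym: adds synonym terms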

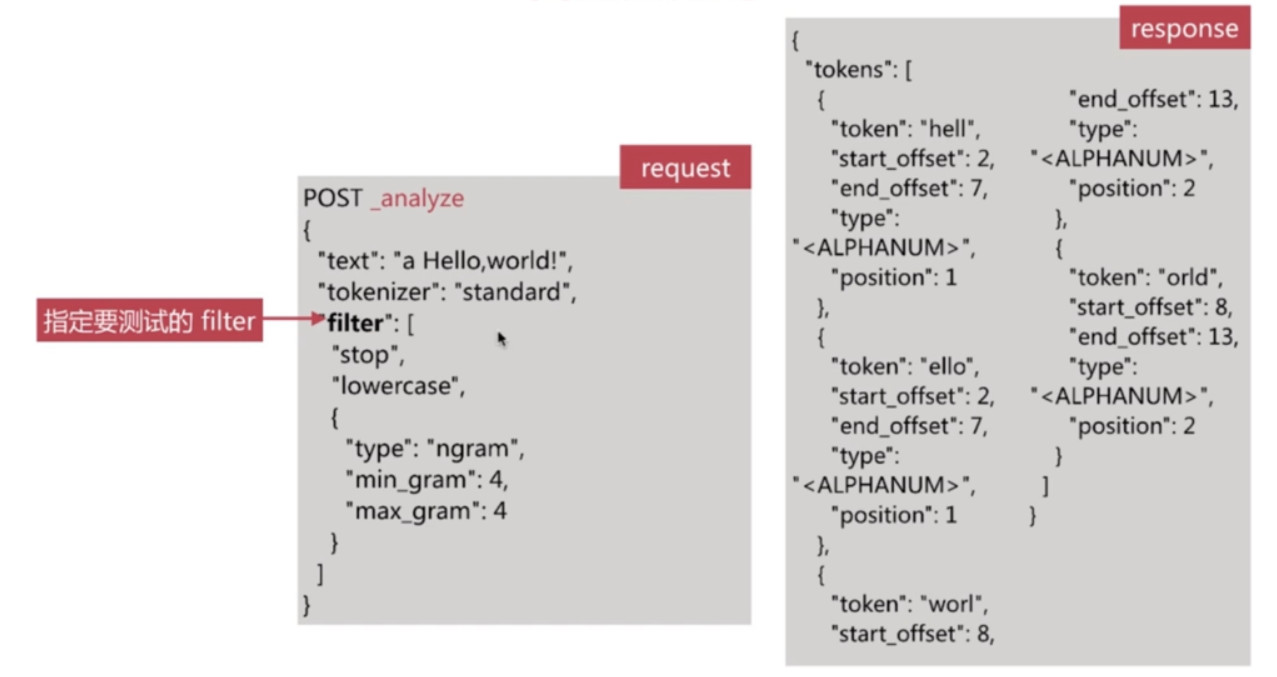
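The filter chain in the request below can be replayed in plain Python. The chain is stop, then lowercase, then 4-grams. Two assumptions apply: a regex stands in for the standard tokenizer, and only a tiny hypothetical stop list is used. Each n-gram keeps its parent token's offsets and position, matching what the response below shows.

```python
import re

STOP_WORDS = frozenset({"a", "an", "the"})  # tiny hypothetical subset of an English list


def tokenize(text):
    """Standard-like tokens as (token, start, end, position)."""
    return [(m.group(), m.start(), m.end(), pos)
            for pos, m in enumerate(re.finditer(r"[^\W_]+", text))]


def stop(tokens):
    # Case-sensitive check, so it matters that this runs before lowercase.
    return [t for t in tokens if t[0] not in STOP_WORDS]


def lowercase(tokens):
    return [(tok.lower(), s, e, p) for tok, s, e, p in tokens]


def ngram(tokens, min_gram, max_gram):
    """Emit grams per start index, then per length; each keeps its parent's offsets."""
    out = []
    for tok, s, e, p in tokens:
        for i in range(len(tok)):
            for n in range(min_gram, max_gram + 1):
                if i + n <= len(tok):
                    out.append((tok[i:i + n], s, e, p))
    return out


tokens = ngram(lowercase(stop(tokenize("a Hello Jiavg"))), 4, 4)
print(tokens)
# [('hell', 2, 7, 1), ('ello', 2, 7, 1), ('jiav', 8, 13, 2), ('iavg', 8, 13, 2)]
```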

Testing token filters

```
# request
POST _analyze
{
  "tokenizer": "standard",
  "filter": [
    "stop",
    "lowercase",
    {"type": "ngram", "max_gram": 4, "min_gram": 4}
  ],
  "text": ["a Hello Jiavg"]
}

# response
{
  "tokens": [
    {"token": "hell", "start_offset": 2, "end_offset": 7, "type": "<ALPHANUM>", "position": 1},
    {"token": "ello", "start_offset": 2, "end_offset": 7, "type": "<ALPHANUM>", "position": 1},
    {"token": "jiav", "start_offset": 8, "end_offset": 13, "type": "<ALPHANUM>", "position": 2},
    {"token": "iavg", "start_offset": 8, "end_offset": 13, "type": "<ALPHANUM>", "position": 2}
  ]
}
```

Custom analyzer API

Custom analyzers are defined in the index settings, as shown below:

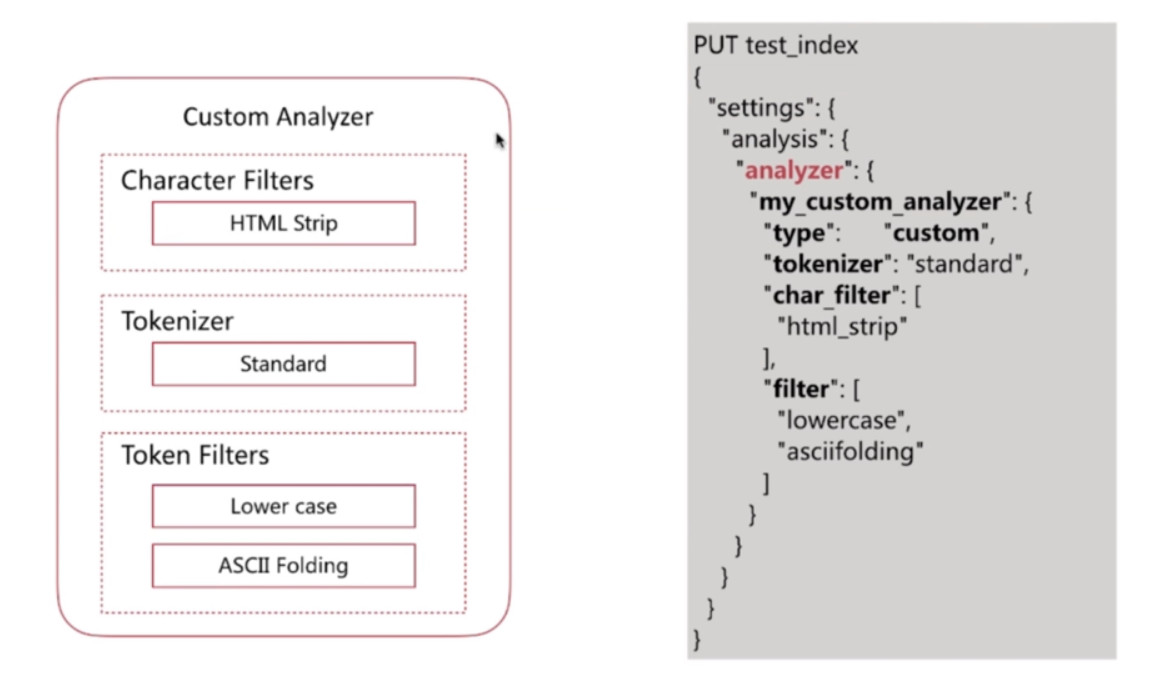

Custom analyzer examples

Example 1:

```
# request
PUT test_index
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer": {
          "type": "custom",
          "char_filter": ["html_strip"],
          "tokenizer": "standard",
          "filter": ["lowercase", "asciifolding"]
        }
      }
    }
  }
}

# response
{
  "acknowledged": true,
  "shards_acknowledged": true,
  "index": "test_index"
}
```

Example 2:

This example maps two emoticons to placeholder words with a mapping character filter: _口亨_ is roughly "hmph" and _哭唧唧_ is roughly "sob". It then splits on punctuation and whitespace with a pattern tokenizer and removes English stop words.

```
# request
PUT test_index2
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer2": {
          "type": "custom",
          "char_filter": ["my_char_filter"],
          "tokenizer": "my_tokenizer",
          "filter": ["my_filter", "lowercase"]
        }
      },
      "char_filter": {
        "my_char_filter": {
          "type": "mapping",
          "mappings": [
            "(- ^ -) => _口亨_",
            "(- - -) => _哭唧唧_"
          ]
        }
      },
      "tokenizer": {
        "my_tokenizer": {
          "type": "pattern",
          "pattern": "[.,!?\\s]"
        }
      },
      "filter": {
        "my_filter": {
          "type": "stop",
          "stopwords": "_english_"
        }
      }
    }
  }
}

# response
{
  "acknowledged": true,
  "shards_acknowledged": true,
  "index": "test_index2"
}
```

```
# request
POST test_index2/_analyze
{
  "analyzer": "my_analyzer2",
  "text": "(- ^ -), no li you, _哭唧唧_"
}

# response
{
  "tokens": [
    {"token": "_口亨_", "start_offset": 0, "end_offset": 7, "type": "word", "position": 0},
    {"token": "li", "start_offset": 12, "end_offset": 14, "type": "word", "position": 2},
    {"token": "you", "start_offset": 15, "end_offset": 18, "type": "word", "position": 3},
    {"token": "_哭唧唧_", "start_offset": 20, "end_offset": 25, "type": "word", "position": 4}
  ]
}
```

When analysis is applied

Analysis is applied at two points:

- when a document is created or updated (index time), its content is analyzed

- when a query runs (search time), the query string is analyzed

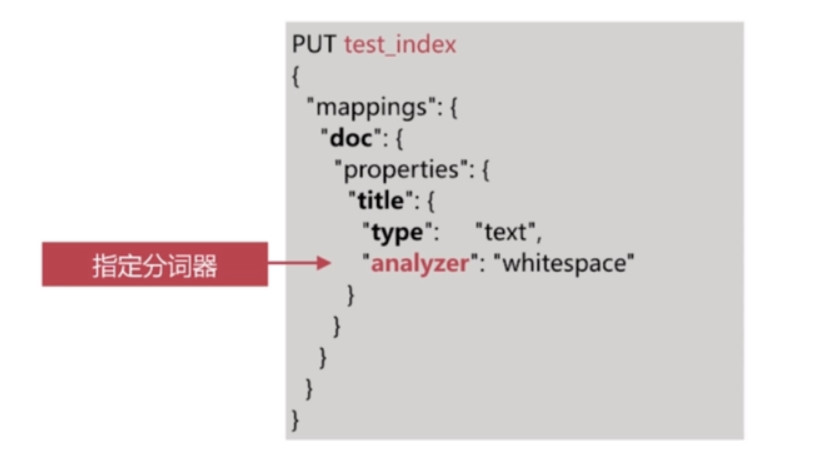
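The sketch below shows why the two points have to agree. Documents are indexed with one analyzer, and the same query is then analyzed two different ways. The analyzers and documents are hypothetical simplifications. Matching uses OR semantics, roughly like a basic match query.

```python
from collections import defaultdict


def lowercase_analyzer(text):
    return [w.lower() for w in text.split()]


def whitespace_analyzer(text):
    return text.split()


def build_index(docs, analyzer):
    """Index time: analyze each document and record term -> doc ids."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in analyzer(text):
            index[term].add(doc_id)
    return index


def search(index, query, analyzer):
    """Search time: analyze the query, then union the hits of its terms."""
    hits = set()
    for term in analyzer(query):
        hits |= index.get(term, set())
    return sorted(hits)


DOCS = {1: "Hello World", 2: "hello elasticsearch"}  # hypothetical documents
index = build_index(DOCS, lowercase_analyzer)
print(search(index, "Hello", lowercase_analyzer))   # [1, 2]
print(search(index, "Hello", whitespace_analyzer))  # []
```

The second query finds nothing. Its search-time analyzer keeps "Hello" capitalized, while only lowercased terms were indexed.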

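Index-time analysis

If no analyzer is specified, standard is used. Index-time analysis is configured through the analyzer property of each field in the index mapping, as shown below: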

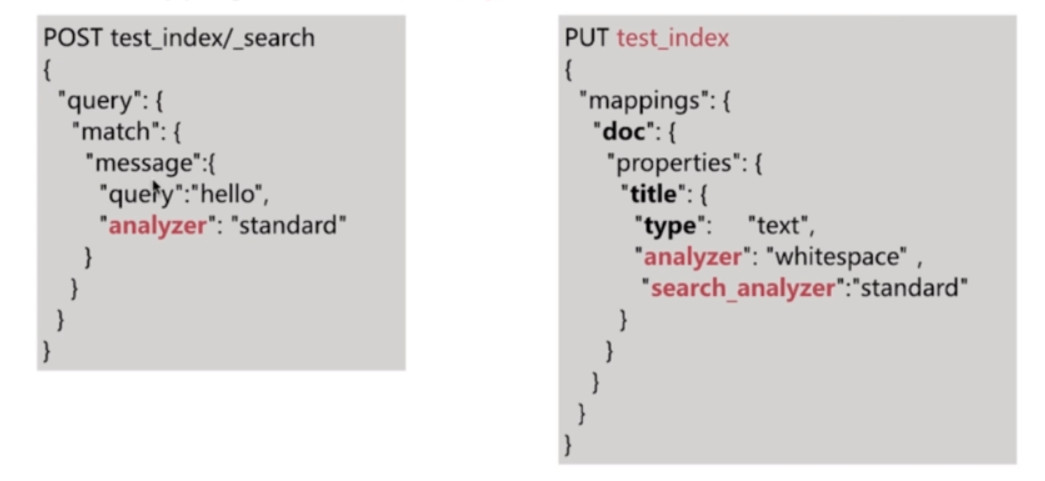

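Search-time analysis

In general there is no need to specify a separate search analyzer: using the index-time analyzer is enough. Otherwise, query terms may fail to match the indexed terms.

The search-time analyzer can be specified in the following ways:

- by setting analyzer in the query itself

- by setting search_analyzer on the field in the index mapping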
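Both options can be written as request bodies. The sketch builds them as Python dicts and prints them in console form. Several names are hypothetical: the index my_index, the field title, and the autocomplete analyzer (edge n-grams at index time, standard at search time). The typeless mappings layout assumes Elasticsearch 7.x or later; older versions nest properties under a mapping type. When a query sets analyzer explicitly, that takes precedence over the field's search_analyzer.

```python
import json

# Hypothetical index body (typeless mappings, Elasticsearch 7.x+ assumed).
# "autocomplete" indexes edge n-grams; searches use "standard" so the
# query text itself is not split into n-grams.
index_body = {
    "settings": {
        "analysis": {
            "tokenizer": {
                "autocomplete_tokenizer": {
                    "type": "edge_ngram",
                    "min_gram": 2,
                    "max_gram": 10,
                    "token_chars": ["letter"],
                }
            },
            "analyzer": {
                "autocomplete": {
                    "type": "custom",
                    "tokenizer": "autocomplete_tokenizer",
                    "filter": ["lowercase"],
                }
            },
        }
    },
    "mappings": {
        "properties": {
            "title": {
                "type": "text",
                "analyzer": "autocomplete",     # used at index time
                "search_analyzer": "standard",  # used at search time
            }
        }
    },
}

# Option 1: override the analyzer for a single match query.
query_body = {
    "query": {"match": {"title": {"query": "Quick Fox", "analyzer": "standard"}}}
}

print("PUT /my_index\n" + json.dumps(index_body, indent=2))
print("GET /my_index/_search\n" + json.dumps(query_body, indent=2))
```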

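Analysis tips

- Decide explicitly whether each field needs analysis. For fields that do not, set the type to keyword: this saves space and improves write performance.

- Make good use of the _analyze API to inspect exactly how documents are tokenized, and test things hands-on.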